Imagine waking up and finding dozens of orders routed to suppliers, tracking numbers updated, inventory synced, and price mark-ups applied, all while you slept.

That’s the power of e-commerce automation, the systemised backbone of a modern e-commerce business built for scale, not just survival.

On the other side is the trusted model of hiring human help: skilled virtual assistants (VAs) who process orders, update product listings, and field customer queries.

Which is the smarter investment for a Shopify dropshipping store owner?

Should you pour your budget into the latest automation stack, or build a team of remote assistants who will “do the work for you”?

In this blog, we’ll unpack both sides: the fully automated route (Shopify dropshipping automation) and the human-powered route (virtual assistants for your Shopify store).

Key Takeaways

- A robust Shopify dropship automation strategy can drastically reduce manual workflows and unlock scalability.

- Virtual assistants offer flexibility and human judgment but may become a bottleneck at high volume.

- The cost-effectiveness of automation vs VAs depends heavily on the volume, margins, and complexity of your dropshipping business.

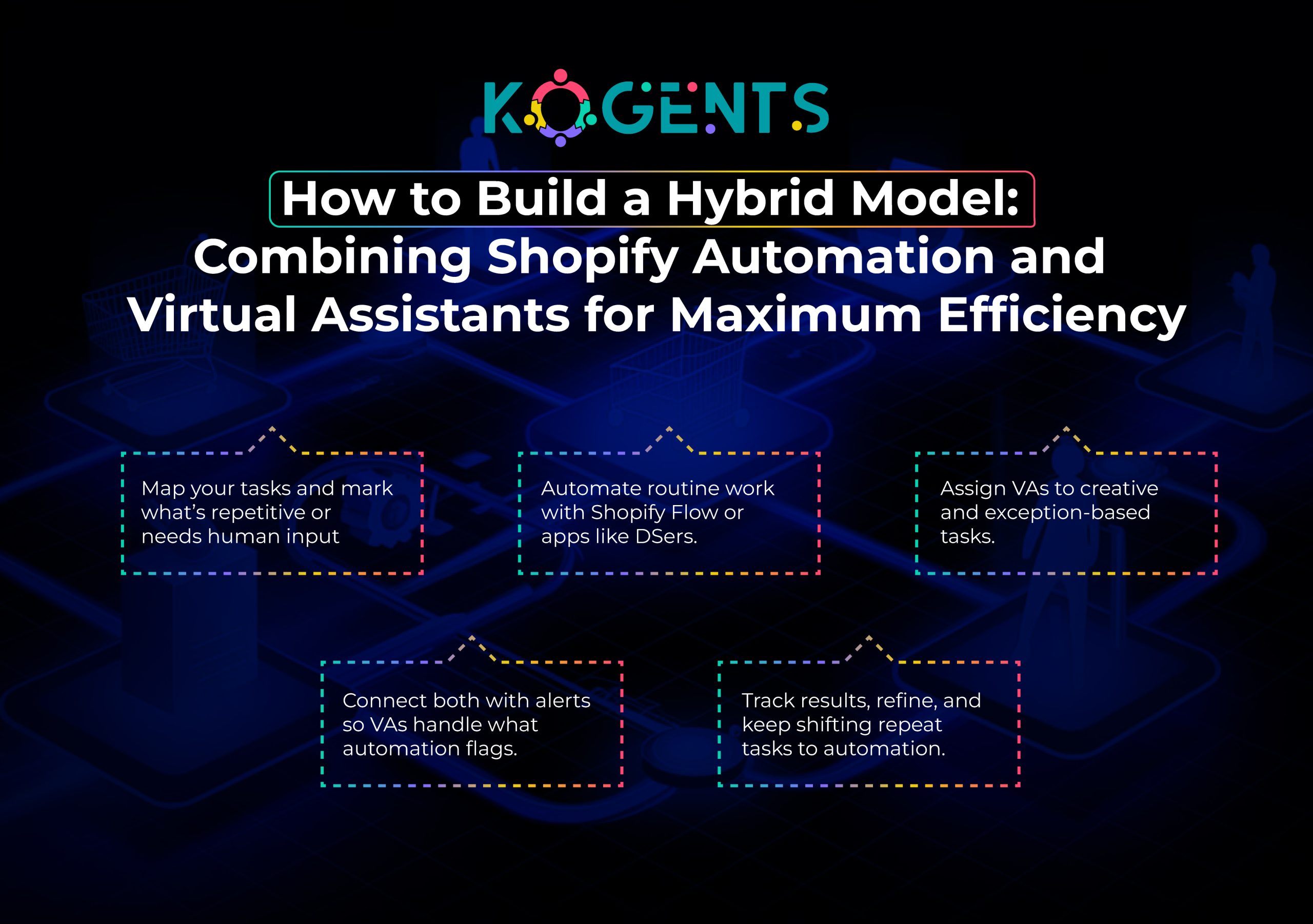

- The best Shopify AI chatbot model is often a hybrid: automation for repetitive tasks + VAs for strategic or complex work.

- Failing to align your investment (humans or software) with your stage, niche, and supplier ecosystem is the biggest risk.

Defining the Stakes: What Is Shopify Dropshipping Automation, and What Are Virtual Assistants in Dropshipping?

When we talk about Shopify dropshipping automation, we mean the ecosystem of apps, triggers, and workflows that lets a dropshipping store’s routine tasks (product import, inventory sync, order routing, tracking updates, and pricing adjustments) run without human intervention.

In contrast, virtual assistants (VAs) in a dropshipping store are remote team members or freelancers who handle tasks like product research, listing uploads, customer messages, returns, and supplier coordination. In short, they take on almost any task a human can do, on an outsourced basis.

They bring judgment, flexibility, troubleshooting skills, and a human touch.

The central question: as a Shopify store owner, should you invest in automating your workflows, or in human help from virtual assistants?

Deep Dive: AutoDS, DSers, and the Rise of Automated Shopify Dropshipping

Let’s unpack what automation can do:

- Order routing: When a buyer places an order, automation tools route it to the correct dropshipping supplier.

Example: When a customer places an order on your website, an app can route it to your dropshipping supplier automatically, so the supplier can start fulfilment as soon as possible.

- Inventory sync: Inventory can be synced across channels automatically, and tools like Duoplane surface vendor inventory feeds so you avoid selling out-of-stock items.

- Pricing and markup automation: You can auto-apply markups, enforce minimum margins, and maintain minimum advertised price (MAP) compliance.

- Order tracking updates: Apps like AutoDS auto-update tracking numbers, streamlining the post-purchase experience.

- Importing products: Tools can pull products from AliExpress, Amazon, and Alibaba into your Shopify store automatically.
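To make the pricing rules concrete, here is a minimal Python sketch of how a markup, a minimum-margin floor, and a MAP floor might combine into one retail price. The 2x markup, the 30% margin floor, and the function name `price_product` are hypothetical values chosen for illustration. They are not settings from AutoDS or any other app.

```python
from decimal import Decimal, ROUND_CEILING
from typing import Optional

# Hypothetical rule values for illustration only; substitute your own.
DEFAULT_MARKUP = Decimal("2.0")  # retail = 2x landed cost
MIN_MARGIN = Decimal("0.30")     # keep at least 30% gross margin
CENT = Decimal("0.01")


def price_product(supplier_cost: Decimal, shipping_cost: Decimal,
                  markup: Decimal = DEFAULT_MARKUP,
                  map_price: Optional[Decimal] = None) -> Decimal:
    """Apply a markup, then lift the price to the margin floor and MAP floor if needed."""
    landed_cost = supplier_cost + shipping_cost

    # 1. Standard markup on the landed (product + shipping) cost.
    price = landed_cost * markup

    # 2. Minimum margin: (price - cost) / price >= MIN_MARGIN
    #    rearranges to price >= cost / (1 - MIN_MARGIN).
    price = max(price, landed_cost / (Decimal(1) - MIN_MARGIN))

    # 3. Minimum advertised price: never list below the supplier's MAP.
    if map_price is not None:
        price = max(price, map_price)

    # Round up to the cent so rounding can never undercut either floor.
    return price.quantize(CENT, rounding=ROUND_CEILING)
```

For example, `price_product(Decimal("12.00"), Decimal("3.00"), markup=Decimal("1.2"))` returns `21.43`. The 1.2x markup alone would give 18.00, which falls below the 30% margin floor, so the floor wins.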

The global e-commerce market is projected to surpass US$6.3 trillion in 2025, which supports the case for scaling automation.

The Case for Virtual Assistants in Dropshipping: When Do Humans Still Matter?

While Shopify automation tools are powerful, they’re not a silver bullet. Virtual assistants bring human judgment, adaptability, and error-handling that many automation systems either lack or struggle with.

For example:

- Judgment calls: A remote VA can step in when suppliers change their terms, when a product listing is flagged, or when a customer complaint requires nuance.

- Cost savings: One article says VAs can save businesses 30–50% in operating costs compared to full-time in-house staff, and in some cases up to 78%.

- Flexibility: Many VAs are paid hourly or per task, enabling e-commerce brands to scale support up or down based on demand.

Head-to-Head Comparison: Automated Shopify Dropshipping vs Virtual Assistants

Here’s a detailed comparison, followed by a summary table.

Cost

- Automation: Typically a fixed subscription fee for apps + implementation costs. Once set up, the marginal cost per order is very low.

- VAs: Hourly or task-based costs; as order volume grows, human hours increase roughly linearly. Expect upfront training costs and ongoing management overhead too.

- Observation: If your volume is low (fewer than a few dozen orders a day), VAs may be more cost-effective; once you scale to hundreds of orders a day or more, automation tends to win.
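A quick break-even calculation shows where that crossover might sit for your store. The sketch below assumes a flat monthly app subscription plus a small per-order fee for automation, and a VA paid hourly who spends a fixed number of minutes on each order. Every number is a made-up placeholder, and the model ignores VA training and management overhead.

```python
# Hypothetical cost model: every input below is a placeholder.
DAYS_PER_MONTH = 30


def monthly_cost_automation(orders_per_day: float, subscription: float,
                            fee_per_order: float) -> float:
    """Flat app subscription plus a small per-order fee."""
    return subscription + fee_per_order * orders_per_day * DAYS_PER_MONTH


def monthly_cost_va(orders_per_day: float, hourly_rate: float,
                    minutes_per_order: float) -> float:
    """VA hours, and therefore cost, grow linearly with order volume."""
    hours = orders_per_day * DAYS_PER_MONTH * minutes_per_order / 60
    return hours * hourly_rate


def break_even_orders_per_day(subscription: float, fee_per_order: float,
                              hourly_rate: float, minutes_per_order: float):
    """Daily volume where both monthly costs match, or None if automation never catches up."""
    va_cost_per_order = hourly_rate * minutes_per_order / 60
    saving_per_order = va_cost_per_order - fee_per_order
    if saving_per_order <= 0:
        return None
    # subscription = orders * days * saving  ->  solve for orders per day
    return subscription / (saving_per_order * DAYS_PER_MONTH)


# Placeholder inputs: $300/month of apps, $0.10 per order, a $6/hour VA, 5 minutes per order.
break_even = break_even_orders_per_day(subscription=300, fee_per_order=0.10,
                                       hourly_rate=6, minutes_per_order=5)
print(f"Automation becomes cheaper above ~{break_even:.0f} orders/day")
```

With these placeholder inputs, automation pays for itself at about 25 orders a day. Plug in your own subscription, fees, VA rate, and handling time to find your own crossover.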

Speed & Scalability

- Automation: Once workflows are live, the system handles orders 24/7, with instant routing and real-time updates.

- VAs: Human speed; risk of delays, time zones, fatigue, errors. Scaling often means hiring more VAs.

- For fast-scaling or high-volume stores, automation has a big edge.

Accuracy & Reliability

- Automation: Rules are applied consistently and don’t suffer from fatigue. But they are rigid: if a supplier’s data feed changes, the workflow may break.

- VAs: Can adapt, handle exceptions, and think on their feet. But prone to human error, distraction, and training issues.

- A hybrid model often gives the best reliability: automation for bulk, human oversight for exceptions.
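In practice, a hybrid setup often comes down to one rule: let automation route the clean orders and push anything unusual to a human. Here is a minimal Python sketch of that split. The SKU-to-supplier map, the stock figures, and `route_order` are hypothetical stand-ins for whatever your dropshipping app and supplier feeds actually provide.

```python
from dataclasses import dataclass


@dataclass
class OrderLine:
    sku: str
    quantity: int


@dataclass
class Order:
    order_id: str
    lines: list[OrderLine]


# Hypothetical data: which supplier ships each SKU, and that supplier's current stock.
SUPPLIER_BY_SKU = {"MUG-01": "supplier_a", "TEE-RED-M": "supplier_b"}
SUPPLIER_STOCK = {"MUG-01": 120, "TEE-RED-M": 0}


def route_order(order: Order) -> tuple[dict[str, list[OrderLine]], list[str]]:
    """Group order lines by supplier, or hold the whole order for a VA if any line is a problem."""
    batches: dict[str, list[OrderLine]] = {}
    exceptions: list[str] = []
    for line in order.lines:
        supplier = SUPPLIER_BY_SKU.get(line.sku)
        if supplier is None:
            exceptions.append(f"{order.order_id}: no supplier mapped for {line.sku}")
        elif SUPPLIER_STOCK.get(line.sku, 0) < line.quantity:
            exceptions.append(f"{order.order_id}: not enough stock for {line.sku}")
        else:
            batches.setdefault(supplier, []).append(line)
    if exceptions:
        # Send nothing to suppliers; a VA decides whether to substitute, split, or contact the buyer.
        return {}, exceptions
    return batches, exceptions
```

Holding the whole order, rather than shipping the lines that pass, is a deliberate choice in this sketch. It avoids split shipments nobody approved, at the cost of slowing down mixed orders.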

Flexibility & Adaptability

- Automation: Great for repeatable workflows, but customizing for edge cases may require dev effort.

- VAs: Flexible, can handle new tasks, unique situations, product research, and creative work.

- When your business needs creative or strategic work, VAs shine. For repeatable operational tasks, automation wins.

Risk Management

- Automation: You risk supplier feed changes breaking workflows, app bugs, and vendor lock-in.

- VAs: Risks include attrition, training and oversight demands, inconsistent quality, and time-zone issues.

- Mitigation: Build fallback plans for whichever route you choose.

Long-Term Strategy

- Automation: Builds an asset: a scalable backend. Once implemented, you can scale globally.

- VAs: More variable; high reliance on human labour may hinder scaling beyond a certain volume.

Soft Reminder: If you plan to grow, automation builds long-term leverage; VAs buy you time and human flexibility.

Brand & Customer Experience

- Automation: Good for operations but may lack a human voice in customer service or brand nuance.

- VAs: Can deliver personal touch, brand voice, strategic input, and creative product curation.

- For a premium brand built on a high-touch customer experience, VAs still matter. For commodity dropshipping stores, automation may suffice.

Summary Table

| Criteria | Automation (Shopify dropshipping automation) | Virtual Assistants (VAs for your Shopify store) |
|---|---|---|
| Up-front cost | Medium (app setup + training) | Low to medium (hiring/training costs) |
| Marginal cost per order | Very low once set up | Higher; scales with orders |
| Scalability | Excellent; can handle high volume | Limited by human hours |
| Speed & real-time handling | Excellent: instant routing and syncing | Slower: human lag, time zones, fatigue |
| Flexibility & creative tasks | Moderate; best for repeatable workflows | High; good for new tasks, strategy, exceptions |
| Risk of human error | Low | Higher |
| Risk of automation breakage | Moderate; feed or app changes can break flows | Lower; humans can adapt |
| Brand experience & customer touch | Operationally solid, less human | More personal, brand-centric |
| Long-term scalability asset | High; builds infrastructure | Lower; human labour is harder to scale indefinitely |

Case Studies

1. Automation-First Case Study

In a study by KEMB GmbH, a client used AI and automation for their Shopify dropshipping operations.

“We used Python and OpenAI algorithms to optimise a Shopify store with thousands of products, from product categorisation to automating dropshipping processes.”

Key improvements: product import, categorisation, order routing, and tracking updates, all handled without human intervention.

The outcome: dramatically reduced manual workload, faster time-to-market for new lines, improved reliability.

2. VA-Heavy Case Study

From a 2025 survey by VA Masters: “Clients using Filipino virtual assistants achieved on average 75% cost savings and 95% satisfaction.”

One e-commerce merchant noted: “Our VA handles inventory, processes orders, and manages customer communications while I launch two new product lines.”

This highlights where the VA model shines: day-to-day store operations, product launches, and customer responsiveness, all areas where the human touch matters.

Additional Insight: Automation Risk Heatmap

| Risk Factor | Severity | Frequency | Risk |
|---|---|---|---|
| Supplier feed failures | High | Medium | High |
| API throttling/outages | Medium | Medium | Medium |
| Variation & SKU mismatches | High | Medium | High |
| App conflicts/app-stack bloat | Medium | High | Medium–High |
| Automation overwriting VA edits | Medium | High | Medium–High |
| Vendor lock-in | Medium | Low | Medium |
| Lack of human nuance | High | High | High |

Insight: Automation is fast and scalable, but vulnerable to system-wide failures that can impact hundreds of orders at once. It needs oversight and fallback rules.

Virtual Assistant Risk Heatmap

| Risk Factor | Severity | Frequency | Risk |
|---|---|---|---|
| Human error | High | High | High |
| Slow processing times | Medium | Medium | Medium |
| Training requirements | Medium | Medium | Medium |
| Turnover/retraining | High | Medium | High |
| Inconsistent quality | Medium | High | Medium–High |
| Time-zone delays | Medium | High | Medium–High |
| Limited automation skills | Medium | Medium | Medium |

Insight: VAs add flexibility and judgement, but they introduce inconsistency, slower processing, and higher error rates, especially as volume scales.
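Both heatmaps appear to follow one consistent rule. This rule is inferred from their rows, not stated by the source. A High-severity risk is rated High at every frequency listed, while a Medium-severity risk becomes Medium–High only when it occurs frequently. The small Python sketch below encodes that reading and uses it to rank what to monitor first. `combined_risk` and `prioritise` are illustrative names.

```python
# Rows transcribed from the two heatmap tables: (factor, severity, frequency, stated risk).
AUTOMATION_RISKS = [
    ("Supplier feed failures", "High", "Medium", "High"),
    ("API throttling/outages", "Medium", "Medium", "Medium"),
    ("Variation & SKU mismatches", "High", "Medium", "High"),
    ("App conflicts/app-stack bloat", "Medium", "High", "Medium–High"),
    ("Automation overwriting VA edits", "Medium", "High", "Medium–High"),
    ("Vendor lock-in", "Medium", "Low", "Medium"),
    ("Lack of human nuance", "High", "High", "High"),
]
VA_RISKS = [
    ("Human error", "High", "High", "High"),
    ("Slow processing times", "Medium", "Medium", "Medium"),
    ("Training requirements", "Medium", "Medium", "Medium"),
    ("Turnover/retraining", "High", "Medium", "High"),
    ("Inconsistent quality", "Medium", "High", "Medium–High"),
    ("Time-zone delays", "Medium", "High", "Medium–High"),
    ("Limited automation skills", "Medium", "Medium", "Medium"),
]

RISK_RANK = {"Medium": 1, "Medium–High": 2, "High": 3}


def combined_risk(severity: str, frequency: str) -> str:
    """One reading of the tables' rule (inferred from the rows, not stated in the source)."""
    if severity == "High":
        return "High"
    if severity == "Medium":
        return "Medium–High" if frequency == "High" else "Medium"
    raise ValueError(f"no rule for severity {severity!r}; the tables only use High and Medium")


def prioritise(rows):
    """Sort rows from highest to lowest combined risk; ties keep their table order."""
    return sorted(rows, key=lambda row: RISK_RANK[combined_risk(row[1], row[2])], reverse=True)


for factor, severity, frequency, _ in prioritise(AUTOMATION_RISKS)[:3]:
    print(f"Monitor first: {factor} ({combined_risk(severity, frequency)})")
```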

Practical Guide: How Should You Decide for Your Store?

Situational Checklist

- Order volume: Are you processing hundreds or thousands of orders weekly?

- Margin & complexity: How healthy are your margins? Are your SKUs standard, or do you handle multiple suppliers, custom products, or complex bundles?

- Growth ambition: Are you scaling aggressively or testing a side-hustle?

- Brand/premium vs commodity: Do you compete on USP/brand voice or price/volume?

- Human judgement required: Do you need creativity, strategic product research, or nuance in customer service?

- Budget & time: Do you have the time and budget to implement automation, or would you prefer ready-to-go human help?

Conclusion

In the battle of Shopify dropshipping automation vs virtual assistants, there’s no one-size-fits-all answer.

If you’re running a small side project, handling a modest number of orders, needing flexibility, and prioritising human-centric tasks, hiring VAs may be the smarter initial investment.

However, if you’re scaling fast, handling hundreds or thousands of orders weekly, aiming for high efficiency, low cost per order, and global reach, then investing in a robust automation stack is the smarter long-term play.

For Shopify store owners, our brand, Kogents.ai, specialises in building hybrid automation-plus-VA models customised for dropshipping entrepreneurs.

If you’re ready to scale smarter, please reach out for a customised audit of your workflows.

FAQs

What is Shopify dropshipping automation?

Shopify dropshipping automation refers to using tools and workflows to automatically handle tasks like product imports, inventory updates, order routing, tracking updates, and pricing mark-ups in a Shopify store without human intervention.

How does automated dropshipping work with Shopify?

It works by installing dropshipping automation apps (e.g., AutoDS) in your Shopify store that connect to supplier feeds, monitor inventory and price changes, trigger order fulfilment when a customer orders, and send tracking updates, all via pre-defined workflows.
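The “monitor inventory and price changes” step can be pictured as a diff between the supplier’s latest feed and the last snapshot your store synced. The Python sketch below is a minimal illustration. It assumes the feed has already been fetched and parsed into SKU-keyed records. `FeedItem`, `sync_from_feed`, and both thresholds are hypothetical and are not part of Shopify’s or AutoDS’s APIs.

```python
from dataclasses import dataclass
from decimal import Decimal

# Hypothetical thresholds for illustration; tune them for your own store.
LOW_STOCK_THRESHOLD = 5
MAX_MISSING_RATIO = 0.2  # halt if more than 20% of known SKUs vanish from the feed


@dataclass(frozen=True)
class FeedItem:
    stock: int
    cost: Decimal


@dataclass
class SyncResult:
    stock_updates: dict[str, int]  # SKU -> new stock level to push to the store
    reprice: list[str]             # SKUs whose supplier cost changed
    alerts: list[str]              # messages for a human to review


def sync_from_feed(last_seen: dict[str, FeedItem], feed: dict[str, FeedItem]) -> SyncResult:
    """Compare the latest supplier feed with the last snapshot the store synced."""
    missing = [sku for sku in last_seen if sku not in feed]
    # A feed that suddenly drops many SKUs is more likely broken than accurate,
    # so stop and ask a human instead of acting on bad data.
    if last_seen and len(missing) / len(last_seen) > MAX_MISSING_RATIO:
        raise RuntimeError(f"feed missing {len(missing)} of {len(last_seen)} SKUs; sync halted")

    result = SyncResult(stock_updates={}, reprice=[], alerts=[])
    for sku in missing:
        result.alerts.append(f"{sku}: not in supplier feed, review manually")
    for sku, old in last_seen.items():
        new = feed.get(sku)
        if new is None:
            continue
        if new.stock != old.stock:
            result.stock_updates[sku] = new.stock
        if new.cost != old.cost:
            result.reprice.append(sku)
        if new.stock == 0:
            result.alerts.append(f"{sku}: out of stock")
        elif new.stock <= LOW_STOCK_THRESHOLD:
            result.alerts.append(f"{sku}: low stock ({new.stock} left)")
    return result
```

The guard at the top reflects the supplier-feed-failure risk: if a large share of SKUs disappears at once, the sync stops and a person checks the feed before anything changes in the store.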

What are the benefits of automating dropshipping on Shopify?

Key benefits include reduced manual work, faster fulfilment, fewer errors, better scalability, improved margins via auto-pricing rules, and freeing your time for strategy and growth.

Which tasks can be automated in a Shopify dropshipping store?

Tasks include product imports, bulk listing uploads, inventory sync, price mark-up adjustments, order routing to suppliers, tracking number updates, low-stock alerts, returns processing, and exception routing.

Is Shopify dropshipping automation profitable?

Often, yes, especially as volume increases. Automation reduces per-order labour costs and errors, enabling you to scale more profitably. However, the initial setup cost must be justified by your volume or margins.

What is a virtual assistant for e-commerce dropshipping stores?

A virtual assistant (VA) is a remote human worker who performs operations tasks such as listing products, processing orders, providing customer support, researching products, and handling exceptions in a dropshipping store.

When is it better to hire VAs instead of relying on automation?

It is better when your order volume is moderate, your business requires human judgement (product selection, brand voice, complex customer service), or you’re at an early stage and want flexibility without heavy upfront automation investment.

What are the drawbacks of relying solely on automation for dropshipping on Shopify?

Drawbacks include setup complexity, dependency on supplier feeds, limited adaptability for exceptions, upfront cost, possible vendor lock-in, and risk of failure if the system isn’t maintained or monitored.

Can I combine automation and virtual assistants in a Shopify dropshipping business?

Absolutely. A hybrid model often delivers the best results: automation handles high-volume, repetitive tasks, while VAs take on creative, strategic, and exception-handling work and monitor automated workflows for issues.