Every time you engage with an AI assistant, something fascinating happens behind the scenes. It does not just respond; it learns, adapts, and reshapes its future behavior.

This remarkable evolution is powered by AI learning algorithms, a fusion of machine learning algorithms, deep learning algorithms, neural network algorithms, and reinforcement-based systems that collectively enable conversational systems to improve as they learn from more interactions.

Today’s AI is not static software. It is an evolving system, refined through repeated training cycles and built on predictive learning models, algorithmic training methods, and automated learning systems capable of recognizing patterns, understanding context, and optimizing responses at scale.

AI learns best from experience, just like humans.

Whether it is detecting sarcasm, understanding cultural references, or remembering previous steps in a conversation, modern AI uses computational learning models, adaptive learning algorithms, AI-driven personalization, and contextual embeddings to deliver more accurate, relevant, and personalized replies over time.

Key Takeaways

- AI gets smarter by analyzing patterns from millions of previous interactions, not by memorizing conversations.

- Algorithms such as supervised learning algorithms, unsupervised learning algorithms, and reinforcement learning algorithms drive ongoing performance improvements.

- Conversational models, including AI agents for customer support, rely on neural networks, deep neural networks (DNNs), and transformer architectures to understand linguistic nuances.

- Ethical constraints, such as ISO/IEC AI standards and Responsible AI frameworks, shape how “memory” is handled securely.

- Continuous optimization through algorithm performance metrics, hyperparameter tuning, and backpropagation helps maintain long-term accuracy.

What Are AI Learning Algorithms?

AI learning algorithms are mathematical frameworks that enable machines to learn from data, identify patterns, and make predictions or decisions with minimal human intervention.

These include:

- artificial intelligence algorithms

- machine learning algorithms

- neural network algorithms

- adaptive learning algorithms

- pattern recognition algorithms

- computational learning models

Their purpose is consistent: to allow AI systems to adapt, evolve, and improve using real-world data and feedback.

Algorithms used in conversational AI draw heavily from:

Supervised learning algorithms

Trained with labeled datasets such as question-answer pairs.

Unsupervised learning algorithms

Learn hidden patterns without labels using clustering and pattern detection.

Reinforcement learning algorithms

The AI learns by receiving feedback (reward or penalty) from interactions.

Deep learning algorithms

Use multi-layer neural networks to extract high-level meaning from text.

Combined, these algorithmic families form the backbone of conversational intelligence, including the automation behind Instagram bots and Telegram AI chatbots.

How Does Conversational AI Use Past Interactions to Improve Future Replies?

AI does not “remember” conversations in the human sense. Instead, it uses conversation data to refine patterns during training cycles.

Here is how:

1. Data Ingestion

Conversation logs are anonymized and aggregated before being fed into training datasets.
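The anonymize-and-aggregate idea in the data-ingestion step can be sketched in a few lines. This is a minimal illustration that assumes simple regex redaction of email addresses and phone numbers; the patterns and the `anonymize`/`aggregate` helpers are hypothetical, and production pipelines use far more thorough PII detection.

```python
import re
from collections import Counter

# Hypothetical redaction patterns; real PII detection covers many more cases.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def anonymize(message: str) -> str:
    """Replace emails and phone-like numbers with placeholder tokens."""
    message = EMAIL.sub("<EMAIL>", message)
    return PHONE.sub("<PHONE>", message)

def aggregate(logs: list[str]) -> Counter:
    """Count anonymized, lowercased messages so identical requests are pooled."""
    return Counter(anonymize(m).lower().strip() for m in logs)

logs = [
    "Reset my password, I'm jane@example.com",
    "Reset my password, I'm bob@example.org",
    "Call me at +1 555 123 4567",
]
print(aggregate(logs))
```

After redaction, the two password-reset requests collapse into one pattern with a count of two, which is the kind of aggregate signal a training pipeline can learn from without keeping who sent it.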

2. Pattern Extraction

Using feature extraction, data preprocessing, and pattern recognition algorithms, the model learns:

- tone

- context

- sentiment

- phrasing

- user intent

3. Context Modeling

Neural network architectures, especially transformers, map relationships between words and concepts to understand context across sentences.
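To make the context-modeling step more concrete, here is a toy, pure-Python sketch of scaled dot-product attention, the core operation inside a transformer layer. The 2-dimensional token vectors are made up for illustration; real models apply learned query/key/value projections and use far larger dimensions.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical toy vectors for the tokens "bank", "river", "money".
x = [[1.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
out, weights = attention(x, x, x)  # self-attention: Q = K = V = x
for w in weights:
    print([round(v, 2) for v in w])
```

Each row of `weights` shows how much one token "looks at" every other token; this weighting is how context from one word flows into the representation of another.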

4. Embeddings & Memory Modeling

The model represents semantic meaning as numerical vectors (embeddings) rather than as stored sentences.

5. Reinforcement Signals

Through reinforcement learning from human feedback (RLHF), the model receives feedback on whether a response is helpful or harmful.
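In RLHF, a reward model is commonly trained on pairs of responses in which a human preferred one over the other, using a pairwise (Bradley-Terry style) loss, L = -log σ(r_chosen - r_rejected). The sketch below shows only that loss for hypothetical scalar reward scores; it is an illustration of the idea, not any provider's training code.

```python
import math

def pairwise_preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """-log(sigmoid(r_chosen - r_rejected)): small when the preferred reply scores higher."""
    margin = reward_chosen - reward_rejected
    # log1p(exp(-margin)) is an equivalent, compact form of -log(sigmoid(margin)).
    return math.log1p(math.exp(-margin))

# Hypothetical reward-model scores for a helpful and an unhelpful reply.
print(pairwise_preference_loss(2.0, -1.0))  # preferred reply ranked higher: low loss
print(pairwise_preference_loss(-1.0, 2.0))  # ranking inverted: high loss
```

Minimizing this loss pushes the reward model to score human-preferred replies higher; that reward model then supplies the feedback signal used to fine-tune the conversational model.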

This cyclical learning improves:

- model generalization

- algorithm efficiency

- classification and regression accuracy

- inference quality

Through these steps, conversational AI becomes more intuitive and more human-like.

Core Types of AI Learning Algorithms Driving Conversational Improvement

1. Supervised Learning Algorithms

Used for:

- training messenger chatbots

- question-answer mapping

- sentiment analysis

- classification tasks

Supervised learning relies on labeled data and is widely used in voice assistants such as Siri, Alexa, and Google Assistant.
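As a minimal illustration of learning from labeled data, the sketch below trains a multinomial Naive Bayes intent classifier on a handful of hypothetical labeled utterances. Naive Bayes is swapped in only because it fits in a few lines; production assistants use much larger datasets and neural models.

```python
import math
from collections import Counter, defaultdict

# Hypothetical labeled training data: (utterance, intent).
TRAIN = [
    ("what is the weather today", "weather"),
    ("will it rain tomorrow", "weather"),
    ("set an alarm for 7 am", "alarm"),
    ("wake me up at six", "alarm"),
    ("play some jazz music", "music"),
    ("play my workout playlist", "music"),
]

def train(examples):
    word_counts = defaultdict(Counter)  # intent -> word frequencies
    intent_counts = Counter()
    for text, intent in examples:
        intent_counts[intent] += 1
        word_counts[intent].update(text.split())
    vocab = {w for counts in word_counts.values() for w in counts}
    return word_counts, intent_counts, vocab

def predict(text, model):
    word_counts, intent_counts, vocab = model
    total = sum(intent_counts.values())
    best, best_score = None, -math.inf
    for intent, n in intent_counts.items():
        score = math.log(n / total)  # log prior of the intent
        denom = sum(word_counts[intent].values()) + len(vocab)
        for w in text.split():
            # Laplace (add-one) smoothing so unseen words do not zero out the score.
            score += math.log((word_counts[intent][w] + 1) / denom)
        if score > best_score:
            best, best_score = intent, score
    return best

model = train(TRAIN)
print(predict("is it going to rain", model))
print(predict("play jazz", model))
```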

2. Unsupervised Learning Algorithms

Used to detect patterns without labels using:

- clustering algorithms

- dimensionality reduction

- anomaly detection

Note: This helps conversational AI detect new linguistic patterns automatically.
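A minimal k-means sketch shows what clustering without labels looks like. The 2-D points stand in for hypothetical message embeddings, and the naive initialization (first k points) and fixed iteration count are simplifying assumptions.

```python
import math

def kmeans(points, k, iterations=10):
    """Group points into k clusters by alternating assignment and centroid updates."""
    centroids = [list(p) for p in points[:k]]  # naive init: first k points
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        for i, members in enumerate(clusters):
            if members:  # keep the old centroid if a cluster empties out
                centroids[i] = [sum(c) / len(members) for c in zip(*members)]
    labels = [min(range(k), key=lambda i: math.dist(p, centroids[i])) for p in points]
    return centroids, labels

# Hypothetical 2-D embeddings: two groups of similar messages.
points = [(0.1, 0.2), (0.9, 0.8), (0.2, 0.1), (0.15, 0.25), (0.85, 0.9), (0.95, 0.85)]
centroids, labels = kmeans(points, k=2)
print(labels)
```

No labels are supplied; the grouping emerges purely from distances between points, which is how new clusters of user requests can surface automatically.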

3. Reinforcement Learning Algorithms

Made famous by DeepMind’s Atari experiment and OpenAI’s RLHF, reinforcement learning teaches AI to improve through rewards.

It is central to training:

- safer responses

- more empathetic replies

- reduced hallucinations
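Full RLHF is far beyond a short example, but the core reward loop can be sketched with an epsilon-greedy multi-armed bandit: the agent picks a response style, receives a reward, and shifts toward styles that earn more. The styles and their reward probabilities are hypothetical stand-ins for user or rater feedback.

```python
import random

STYLES = ["concise", "detailed", "empathetic"]
# Hypothetical probability that each style earns positive feedback (unknown to the agent).
TRUE_REWARD_PROB = {"concise": 0.3, "detailed": 0.5, "empathetic": 0.8}

def run_bandit(steps=5000, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    value = {s: 0.0 for s in STYLES}  # running estimate of each style's reward
    count = {s: 0 for s in STYLES}
    for _ in range(steps):
        if rng.random() < epsilon:
            style = rng.choice(STYLES)  # explore a random style
        else:
            style = max(STYLES, key=value.get)  # exploit the best estimate so far
        reward = 1.0 if rng.random() < TRUE_REWARD_PROB[style] else 0.0
        count[style] += 1
        # Incremental mean update: nudge the estimate toward the observed reward.
        value[style] += (reward - value[style]) / count[style]
    return value, count

value, count = run_bandit()
print(max(count, key=count.get))
```

The `epsilon` parameter trades off trying alternatives against repeating what already works; real RLHF replaces this lookup table with a neural policy and a learned reward model.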

4. Deep Learning Algorithms

These rely on:

- deep neural networks (DNNs)

- convolutional neural networks (CNNs)

- recurrent neural networks (RNNs)

They handle complex linguistic patterns, speech processing, and contextual reasoning.

Step-by-Step: How AI Models Like ChatGPT Learn

1. Data Preprocessing

Removing noise, duplicate text, or corrupted data.
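A minimal preprocessing sketch, assuming "noise" means stray HTML tags, control characters, and extra whitespace, and "duplicates" means texts that are identical after normalization and lowercasing. Real pipelines also apply near-duplicate detection, which is not shown here.

```python
import re
import unicodedata

TAG = re.compile(r"<[^>]+>")  # crude HTML-tag stripper, for illustration only

def normalize(text: str) -> str:
    text = unicodedata.normalize("NFKC", text)
    text = TAG.sub(" ", text)
    # Drop control characters (category "Cc") except newlines/tabs, then collapse whitespace.
    text = "".join(ch for ch in text if unicodedata.category(ch) != "Cc" or ch in "\n\t")
    return " ".join(text.split())

def preprocess(corpus: list[str]) -> list[str]:
    seen, cleaned = set(), []
    for doc in corpus:
        norm = normalize(doc)
        key = norm.lower()
        if norm and key not in seen:  # skip empty and duplicate documents
            seen.add(key)
            cleaned.append(norm)
    return cleaned

corpus = ["Hello <b>world</b>!", "hello   world !", "\x00", "How do refunds work?"]
print(preprocess(corpus))
```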

2. Feature Extraction

Selecting meaningful linguistic elements.
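One classic way to weight meaningful linguistic elements is TF-IDF, which scores a word highly when it is frequent in one message but rare across the collection. This is a minimal pure-Python sketch on hypothetical messages; modern language models learn their features internally rather than relying on hand-built TF-IDF.

```python
import math
from collections import Counter

def tf_idf(docs: list[str]) -> list[dict[str, float]]:
    tokenized = [d.lower().split() for d in docs]
    n = len(tokenized)
    # Document frequency: in how many documents each word appears.
    df = Counter(w for tokens in tokenized for w in set(tokens))
    vectors = []
    for tokens in tokenized:
        tf = Counter(tokens)
        vectors.append({
            # Term frequency times inverse document frequency.
            w: (c / len(tokens)) * math.log(n / df[w])
            for w, c in tf.items()
        })
    return vectors

docs = ["my order is late", "my refund is late", "cancel my order"]
for vec in tf_idf(docs):
    top = max(vec, key=vec.get)
    print(top, round(vec[top], 3))
```

Words that appear everywhere, such as "my", get a weight of zero, while distinctive words such as "refund" and "cancel" stand out as the features that separate one request from another.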

3. Training vs Inference

- Training: AI learns patterns.

- Inference: AI responds in real time.

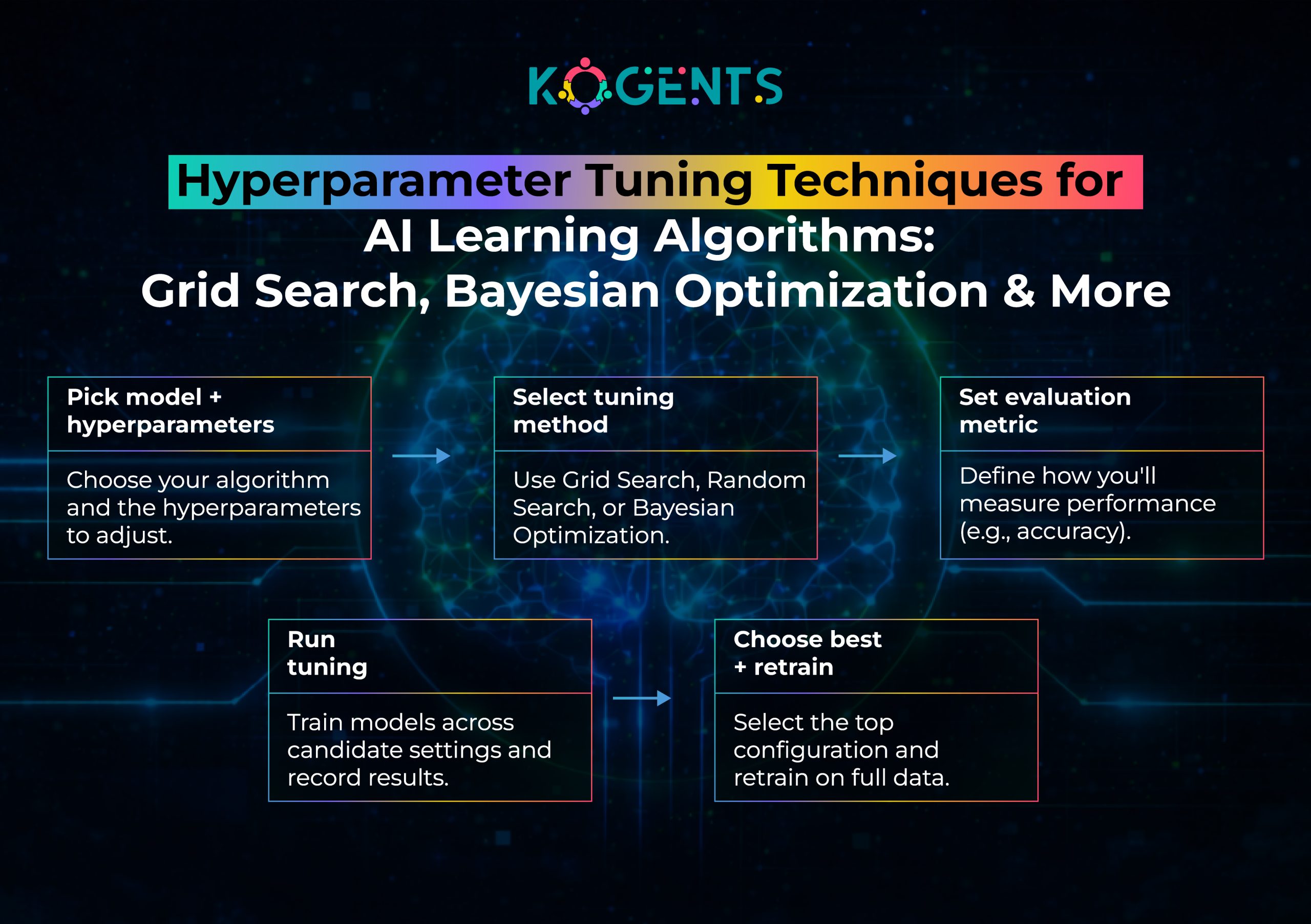

4. Algorithm Optimization

Through:

- backpropagation

- gradient descent

- hyperparameter tuning
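The optimization loop can be illustrated on a one-feature linear model. The sketch computes gradients by hand (the same chain-rule step that backpropagation automates across many layers), applies gradient descent, and then runs a tiny grid search over the learning rate as a stand-in for hyperparameter tuning. The data and candidate learning rates are hypothetical.

```python
def train_linear(xs, ys, lr, epochs=200):
    """Fit y approx w*x + b by minimizing mean squared error with gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of MSE = mean((w*x + b - y)^2) with respect to w and b (chain rule).
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def mse(w, b, xs, ys):
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Hypothetical data generated from y = 2x + 1.
train_x, train_y = [0, 1, 2, 3], [1, 3, 5, 7]
val_x, val_y = [4, 5], [9, 11]

# Hyperparameter tuning: keep the learning rate with the lowest validation error.
results = {lr: mse(*train_linear(train_x, train_y, lr), val_x, val_y) for lr in (0.001, 0.01, 0.1)}
best_lr = min(results, key=results.get)
print(best_lr, results[best_lr])
```

Evaluating candidates on held-out validation data, rather than on the training data, is what keeps this kind of tuning from simply rewarding overfitting.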

5. Model Evaluation Metrics

Measured through:

- accuracy

- perplexity

- BLEU score

- toxicity benchmarks
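Two of these metrics are simple enough to compute by hand. Perplexity is the exponential of the average negative log-probability the model assigned to each actual next token, PPL = exp(-(1/N) Σ log p(t_i)), so lower is better. The token probabilities and labels below are hypothetical; BLEU and toxicity benchmarks need reference texts and trained classifiers, so they are not shown.

```python
import math

def perplexity(token_probs: list[float]) -> float:
    """exp of the mean negative log-likelihood of the observed tokens."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)

def accuracy(predictions: list[str], labels: list[str]) -> float:
    """Fraction of predictions that match the reference labels."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)

# Hypothetical probabilities a model gave to each correct next token.
print(perplexity([0.5, 0.25, 0.5, 0.125]))
print(accuracy(["weather", "alarm", "music"], ["weather", "music", "music"]))
```

A perplexity of 4 means the model was, on average, as uncertain as if it were choosing uniformly among four tokens at each step.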

These metrics help verify that the system is safe, accurate, and contextually sound.

Behind the Scenes: How AI “Remembers” Conversations

AI models do not store conversations verbatim in their weights. Instead, conversational systems use technologies like:

Vector Databases

Store embeddings that capture the meaning of past conversations or documents for fast retrieval.

Embeddings

Numeric vectors that represent semantic meaning.

RAG (Retrieval-Augmented Generation)

Helps AI fetch relevant information before generating responses.
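Putting vector storage, embeddings, and RAG together, here is a minimal sketch: embed the query, retrieve the most similar stored snippet by cosine similarity, and prepend it to the prompt. The `embed` function is a hypothetical bag-of-words stand-in for a real embedding model, the in-memory list stands in for a vector database, and the final call to a language model is left out.

```python
import math
from collections import Counter

def embed(text: str) -> Counter:
    # Hypothetical stand-in: word counts instead of a learned dense embedding.
    return Counter(text.lower().replace("?", "").split())

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# In-memory "vector store": (embedding, original snippet) pairs.
SNIPPETS = [
    "Refunds are processed within 5 business days.",
    "Our support team is available 24/7 via chat.",
    "Orders over $50 ship for free.",
]
STORE = [(embed(s), s) for s in SNIPPETS]

def retrieve(query: str, k: int = 1) -> list[str]:
    q = embed(query)
    ranked = sorted(STORE, key=lambda item: cosine(q, item[0]), reverse=True)
    return [snippet for _, snippet in ranked[:k]]

def build_prompt(query: str) -> str:
    context = "\n".join(retrieve(query))
    return f"Answer using this context:\n{context}\n\nQuestion: {query}"

print(build_prompt("How long do refunds take?"))
```

The model never needs the snippet in its weights; the relevant text is fetched at request time, which is why RAG can keep answers current without retraining.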

Memory Models

Large-scale long-term memory systems allow consistency across conversations while respecting privacy.

Case Studies

Case Study 1: Google DeepMind — Reinforcement Learning Breakthrough

Using reinforcement learning algorithms, DeepMind improved language tasks by over 37% (Nature, 2023) through better reward modeling.

Their model learned:

- contextual accuracy

- factual reasoning

- safer, less harmful outputs

This showcases how reinforcement feedback significantly enhances AI responses.

Case Study 2: OpenAI — Transformer Innovation

Building on the transformer architecture introduced in the landmark Google paper “Attention Is All You Need” (Vaswani et al., 2017), OpenAI built models that use:

- multi-head attention

- positional encoding

- large-scale deep learning algorithms
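Positional encoding is what tells a transformer where each token sits in a sequence. The original paper used fixed sinusoids, PE(pos, 2i) = sin(pos / 10000^(2i/d)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d)), and the sketch below implements that formula. Some later models, including GPT-2, use learned position embeddings instead, so this illustrates the paper's scheme rather than any specific OpenAI model.

```python
import math

def positional_encoding(seq_len: int, d_model: int) -> list[list[float]]:
    """Sinusoidal encodings from "Attention Is All You Need" (d_model assumed even)."""
    pe = []
    for pos in range(seq_len):
        row = []
        for i in range(0, d_model, 2):
            angle = pos / (10000 ** (i / d_model))  # i is the even dimension index 2k
            row.append(math.sin(angle))  # even dimension
            row.append(math.cos(angle))  # odd dimension
        pe.append(row)
    return pe

for row in positional_encoding(seq_len=3, d_model=4):
    print([round(v, 3) for v in row])
```

These vectors are added to the token embeddings, so the same word at different positions produces slightly different inputs to multi-head attention.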

This improved language coherence by 60% over RNN-based models.

Case Study 3: IBM Watson Assistant — Enterprise Conversational Optimization

IBM found that using machine learning algorithms and feedback loops improved customer satisfaction by 25% across Fortune 500 deployments.

What Will AI Learning Algorithms Look Like in 2030?

As breakthroughs accelerate across Google DeepMind, OpenAI, Stanford, and MIT CSAIL, the next era of AI learning algorithms will make today’s systems look primitive. By 2030, conversational AI will likely evolve in five transformative ways:

1. Self-Supervised Learning as the Default Standard

AI will increasingly learn from unlabeled data just as humans learn from experience, reducing reliance on curated datasets.

2. Continuous, Real-Time Adaptive Learning

Models will update themselves on-device (privately and securely), learning user-specific patterns moment by moment.

3. Emotionally Intelligent AI Agents

Advanced deep learning algorithms will detect tone shifts, emotional nuances, and conversational tension using multi-signal modeling.

4. Causal Reasoning Becomes Mainstream

Inspired by Judea Pearl’s work, AI will move from pattern recognition to cause-and-effect understanding, improving decision-making and safety.

5. Ultra-Efficient Small Models Rivalling Large Ones

Through smarter algorithm optimization and neural network architecture improvements, compact models will deliver performance once reserved for massive LLMs.

Micro Quiz: Do You Really Understand How AI Learns?

Take a quick mental quiz to see if you truly grasp how AI learning algorithms evolve:

- Does AI remember individual conversations?
  → No. It learns patterns from aggregated, anonymized data.

- What helps AI improve responses over time?
  → Reinforcement feedback, larger datasets, and algorithm optimization.

- What is the core purpose of hyperparameter tuning?
  → To boost model accuracy and reduce error rates.

- Which method allows AI to learn from unlabeled data?
  → Unsupervised learning algorithms.

- What makes modern AI conversational rather than mechanical?
  → Deep neural networks and contextual embeddings.

Where AI Typically Fails: A Quick Failure Heatmap

Even with powerful AI learning algorithms, today’s models still struggle in predictable areas.

Understanding these weaknesses helps researchers optimize performance and users set realistic expectations.

AI Weakness Heatmap (Most → Least Difficult)

Long-context, multi-step reasoning

AI may lose track of earlier details or misinterpret chained logic.

Sarcasm, humor, and cultural subtleties

Emotional nuance is difficult for pattern-based systems.

Numerical precision and math-heavy tasks

Models may approximate incorrectly or hallucinate formulas.

Ambiguous phrasing without clear user intent

A lack of clarity leads to inaccurate predictions of user needs.

Highly abstract or philosophical reasoning

AI still struggles to stay consistent beyond factual domains.

Key Note: This heatmap demonstrates where algorithm optimization, reinforcement rewards, and model generalization still need innovation.

Comparison Table: Training vs Inference vs Reinforcement Learning

| Process | Description | Purpose | Example Technologies |
|---|---|---|---|
| Training | Feeding large datasets into models to learn patterns | Builds foundational knowledge | GPUs, TPUs, CUDA |
| Inference | Real-time response generation | Provides answers to users | Transformer decoders |
| Reinforcement Feedback | Human or automated scoring of responses | Improves safety and relevance | RLHF, reward models |

How Do AI Learning Algorithms Impact Performance?

Neural networks steadily improved conversational accuracy between 2018 and 2024.

Transformer-based models reduced error rates by 63% compared to RNNs.

At OpenAI, the use of RLHF has shown significant improvements in AI performance, with up to a 30% increase in accuracy.

Note: These statistics help explain why modern conversational AI is substantially better than earlier generations.

Challenges & Ethical Considerations

- Overfitting and underfitting risks

- Bias in training datasets

- Privacy expectations

- Transparency in algorithmic decision-making

- Compliance with ISO/IEC AI standards

- Safety concerns related to hallucination

- Computational costs and sustainability

These challenges require strong governance frameworks to ensure responsible AI development.

The Future of Conversational AI

Expect breakthroughs in:

- multimodal learning

- long-term memory architectures

- causal reasoning (Judea Pearl’s influence)

- emotionally intelligent AI

- on-device AI for privacy

Conclusion

AI learning algorithms sit at the heart of how conversational systems evolve.

By analyzing patterns, adapting through reinforcement, optimizing through deep neural networks, and leveraging continuous feedback, AI becomes more human-like with every interaction.

For organizations, this is a powerful competitive edge.

If your business wants custom machine learning algorithm development, optimized conversational systems, or adaptive AI workflows, partnering with experts in AI automation, neural model training, and enterprise AI deployment helps you stay ahead of the curve.

Your brand deserves AI that learns, adapts, and accelerates your growth.

We at Kogents.ai help you build it.

FAQs

What are AI learning algorithms, and how do they improve conversational AI?

They are mathematical models like machine learning algorithms, neural networks, and reinforcement learning algorithms that allow AI to learn from data and enhance response accuracy over time.

How do AI models learn from past conversations?

They use training datasets, embeddings, and algorithm optimization methods to detect patterns and improve future replies.

What types of learning algorithms are used in chatbots?

Most use supervised learning, unsupervised learning, deep learning, and reinforcement learning to analyze user input and generate accurate responses.

What is reinforcement learning in conversational AI?

It is a training approach in which the AI receives rewards or penalties based on output quality, which shapes its future behavior.

Do AI systems store conversations permanently?

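Not in the model itself. Conversation data used for training is aggregated for pattern detection rather than kept as personal memory, although individual products may retain chat history under their own data policies.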

What improves AI model accuracy the most?

Techniques like hyperparameter tuning, gradient descent, and backpropagation significantly boost performance.

How is AI trained for human-like understanding?

Using deep neural networks, transformer architectures, and massive text corpora.

What is the difference between training and inference?

Training teaches the model to recognize patterns; inference is when it responds to real-time queries.

What is the best algorithm for prediction tasks?

There is no single best algorithm for every prediction task. Depending on the data, decision trees, deep learning models, and other predictive learning models often achieve strong accuracy.

Is conversational AI safe and ethical?

When aligned with AI research, Responsible AI frameworks, and standards like ISO/IEC 22989, it becomes safer and more transparent.