The enterprise world isn’t debating “What can AI do?” anymore.

Instead, the billion-dollar question is:

Can AI do it reliably, repeatedly, and without supervision?

This is where the distinction between AI assistants and AI agents becomes mission-critical.

While assistants enhance thinking, agents enhance doing, transforming workflows into autonomous, self-correcting systems.

As organizations evolve from conversational interfaces to action-taking intelligence, reliability becomes the deciding factor between transformative impact and operational chaos.

This comprehensive guide unpacks everything enterprises need to know about AI Agents vs AI Assistants, backed by research from Stanford, MIT, DeepMind, OpenAI, Microsoft, and real-world case studies.

Key Takeaways

- AI assistants help humans think; AI agents help systems act.

- Reliability, not intelligence, is the hardest challenge in autonomous execution.

- Agents require planners, validators, and execution engines that assistants lack.

- Enterprises implementing agents see measurable ROI, often through greater automation lift.

- The future blends both: conversational interfaces + autonomous operational systems.

AI Assistants — The Cognitive Intelligence Layer

AI assistants form the cognitive, conversational layer of enterprise AI systems.

They excel at:

- natural language processing

- contextual understanding

- summarization

- ideation

- information retrieval

- guided decision support

- customer communication

Core technologies include:

- NLP (Natural Language Processing)

- Large Language Models (LLMs)

- conversational AI

- retrieval-augmented generation

- prompt engineering

AI assistants are intentionally non-autonomous.

They support users, not systems, by interpreting language, providing explanations, and enhancing productivity.

AI Agents — The Operational Intelligence Layer

AI agents, in contrast, are autonomous, action-taking AI systems engineered for real-world task execution.

They rely on:

- agent architecture

- autonomous decision loops

- multi-step reasoning

- function calling

- tool-use capability in AI

- event-driven workflows

- reinforcement-learning-inspired strategies

- workflow orchestration

Agents perform:

- cross-system actions

- data entry

- CRM updates

- SaaS tool operations

- email sequences

- database queries

- multi-step workflows

They are the execution layer of AI ecosystems, built not to converse, but to perform.

The Reliability Problem — The True Barrier to Autonomous Systems

Reliability, not reasoning, is the greatest challenge for enterprises.

Stanford’s AI Index notes LLMs vary widely in execution consistency, even with identical prompts.

MIT CSAIL emphasizes that execution credibility is a separate engineering challenge.

Major agent reliability failure sources:

- Hallucinated tool calls

- unverified multi-step plans

- misunderstanding API schemas

- weak validation

- infinite loops

- broken state awareness

- high-confidence incorrect actions

This is why enterprise-grade agents require:

- NIST AI Risk Management Framework

- ISO/IEC 42001 safety governance

- access control

- action auditing

- sandbox execution testing

- rate limits

Without guardrails, agents introduce operational risk; with them, agents become high-value automation engines.

| Capability | AI Assistants | AI Agents |
| --- | --- | --- |
| Nature | Conversational, cognitive | Autonomous, operational |
| Intelligence Type | LLM reasoning | Agentic decision systems |
| Goal | Support humans | Execute tasks |
| Architecture | Input → Response | Observe → Reason → Plan → Act → Evaluate |
| Tool Use | Limited | Full API/tool invocation |
| Risk | Low | Medium–High |
| Ideal For | Knowledge tasks | Multi-step workflows |
| Examples | ChatGPT, Claude, Copilot | AutoGen, LangChain Agents, Kogents.ai |
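The Observe → Reason → Plan → Act → Evaluate cycle listed for agents can be pictured as a bounded control loop. The following is a minimal sketch, not any framework's API: `run_agent_loop`, `LoopResult`, the four callbacks, and the toy counter environment are all hypothetical names chosen for illustration, and the "reason" and "plan" stages are collapsed into a single `plan` callback.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class LoopResult:
    succeeded: bool
    steps: int
    history: List[str] = field(default_factory=list)


def run_agent_loop(
    observe: Callable[[], int],
    plan: Callable[[int], str],
    act: Callable[[str], None],
    evaluate: Callable[[int], bool],
    max_steps: int = 10,
) -> LoopResult:
    """Repeat observe -> plan -> act until evaluate() passes or the step budget is spent."""
    history: List[str] = []
    for step in range(1, max_steps + 1):
        state = observe()
        if evaluate(state):
            # Goal already met: report how many actions were actually taken.
            return LoopResult(True, step - 1, history)
        action = plan(state)
        act(action)
        history.append(action)
    # Budget exhausted: do one final check instead of looping forever.
    return LoopResult(evaluate(observe()), max_steps, history)


# Toy environment (hypothetical): drive a counter up to a target value.
counter = {"value": 0}
TARGET = 3

result = run_agent_loop(
    observe=lambda: counter["value"],
    plan=lambda state: "increment",
    act=lambda action: counter.update(value=counter["value"] + 1),
    evaluate=lambda state: state >= TARGET,
)
print(result.succeeded, result.steps, result.history)
```

The explicit `max_steps` budget is the design choice worth noting: it gives the loop a hard stop even when the evaluation step never reports success.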

The Four Reliability Pillars for Safe Enterprise Deployment

Four pillars determine whether an enterprise agent can operate safely:

1. Deterministic Execution

Agents must behave consistently, regardless of prompt variation.

This requires:

- Deterministic planning loops

- vector-database-backed memory

- schema-validated actions
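As one way to picture schema-validated actions, the sketch below checks a proposed action against a declared schema before anything is executed. It is a simplified illustration under stated assumptions: `UPDATE_CRM_SCHEMA`, `validate_action`, and the field names are hypothetical, and a production system would more likely use a full schema language than a name-to-type mapping.

```python
from typing import Any, Dict, List

# Hypothetical action schema: required field name -> expected Python type.
UPDATE_CRM_SCHEMA: Dict[str, type] = {
    "record_id": str,
    "field": str,
    "value": str,
}


def validate_action(action: Dict[str, Any], schema: Dict[str, type]) -> List[str]:
    """Return a list of validation errors; an empty list means the action is well-formed."""
    errors: List[str] = []
    for name, expected in schema.items():
        if name not in action:
            errors.append(f"missing field: {name}")
        elif not isinstance(action[name], expected):
            errors.append(f"wrong type for {name}: expected {expected.__name__}")
    # Reject fields the schema does not know about rather than silently passing them on.
    for name in action:
        if name not in schema:
            errors.append(f"unexpected field: {name}")
    return errors


good = {"record_id": "A-17", "field": "status", "value": "closed"}
bad = {"record_id": 17, "field": "status", "note": "extra"}

print(validate_action(good, UPDATE_CRM_SCHEMA))
print(validate_action(bad, UPDATE_CRM_SCHEMA))
```

Because the check is a pure function of the action and the schema, the same proposed action always yields the same verdict, regardless of how the prompt that produced it was worded.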

2. Verified Tool-Use

Incorrect tool invocation is the most common agent failure.

Reliability requires:

- parameter validation

- tool-selection disambiguation

- execution simulation

- forced confirmation logic
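Three of the checks above (parameter validation, execution simulation, and forced confirmation) can be combined in a single tool-invocation wrapper. This is a hedged sketch only: the `TOOLS` registry, the two toy tools, and the `invoke_tool` signature are hypothetical, and tool-selection disambiguation is left out.

```python
from typing import Callable, Dict, Tuple


def fetch_record(record_id: str) -> str:
    return f"fetched {record_id}"


def delete_record(record_id: str) -> str:
    return f"deleted {record_id}"


# Hypothetical tool registry: tool name -> (handler, is_destructive).
TOOLS: Dict[str, Tuple[Callable[[str], str], bool]] = {
    "fetch_record": (fetch_record, False),
    "delete_record": (delete_record, True),
}


def invoke_tool(
    name: str,
    record_id: str,
    *,
    dry_run: bool = False,
    confirm: Callable[[str], bool] = lambda prompt: False,
) -> str:
    """Validate the call, optionally simulate it, and require confirmation for destructive tools."""
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    if not isinstance(record_id, str) or not record_id:
        raise ValueError("record_id must be a non-empty string")
    handler, destructive = TOOLS[name]
    if dry_run:
        # Execution simulation: describe the call without performing it.
        return f"DRY RUN: would call {name}({record_id!r})"
    if destructive and not confirm(f"Allow {name} on {record_id}?"):
        return f"BLOCKED: {name} requires confirmation"
    return handler(record_id)


print(invoke_tool("fetch_record", "A-17"))
print(invoke_tool("delete_record", "A-17", dry_run=True))
print(invoke_tool("delete_record", "A-17"))
print(invoke_tool("delete_record", "A-17", confirm=lambda prompt: True))
```

The default `confirm` callback refuses, so a destructive tool stays blocked unless the caller explicitly wires in an approval step.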

3. State Awareness

Agents must understand and retain:

- workflow progress

- system state

- environment signals

- historical actions

This moves agents from probabilistic generation to state-grounded autonomy.
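A small sketch of what tracking workflow progress and historical actions might look like in code follows. The `WorkflowState` class and the step names are hypothetical; the point is only that the agent's claimed progress is checked against a recorded plan rather than trusted.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class WorkflowState:
    steps: List[str]                                     # the planned workflow, in order
    completed: List[str] = field(default_factory=list)   # historical actions

    def next_step(self) -> Optional[str]:
        """Return the next expected step, or None when the workflow is finished."""
        if len(self.completed) < len(self.steps):
            return self.steps[len(self.completed)]
        return None

    def record(self, step: str) -> None:
        """Record a finished step, refusing anything that is out of order."""
        expected = self.next_step()
        if step != expected:
            raise RuntimeError(f"state drift: expected {expected!r}, got {step!r}")
        self.completed.append(step)

    @property
    def done(self) -> bool:
        return self.next_step() is None


state = WorkflowState(["validate_invoice", "update_ledger", "notify_owner"])
state.record("validate_invoice")
try:
    state.record("notify_owner")  # skips update_ledger
except RuntimeError as exc:
    print(exc)
print(state.next_step(), state.done)
```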

4. Governance & Compliance

Agents need:

- Role-based access controls

- action logs

- audit trails

- kill-switches

- policy-based action rules

This ensures compliance across GDPR, HIPAA, SOX, PCI DSS, and internal enterprise controls.
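The controls in this list can be sketched as a thin wrapper around every action. This example is illustrative only: `ROLE_PERMISSIONS`, `GovernedExecutor`, and the action names are hypothetical, and the in-memory list stands in for a durable, tamper-evident audit store.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple

# Hypothetical role-based access control table: role -> permitted actions.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "reader": {"read_record"},
    "operator": {"read_record", "update_record"},
}


@dataclass
class GovernedExecutor:
    role: str
    kill_switch: bool = False
    audit_log: List[Tuple[str, str]] = field(default_factory=list)

    def execute(self, action: str) -> bool:
        """Decide whether an action may run, and log the decision either way."""
        if self.kill_switch:
            decision = "denied:kill_switch"
        elif action not in ROLE_PERMISSIONS.get(self.role, set()):
            decision = "denied:role"
        else:
            decision = "allowed"
        self.audit_log.append((action, decision))
        return decision == "allowed"


agent = GovernedExecutor(role="reader")
print(agent.execute("read_record"))
print(agent.execute("update_record"))
agent.kill_switch = True
print(agent.execute("read_record"))
print(agent.audit_log)
```

Denied attempts are logged alongside allowed ones, since an audit trail that records only successful actions would hide the overreach it is meant to surface.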

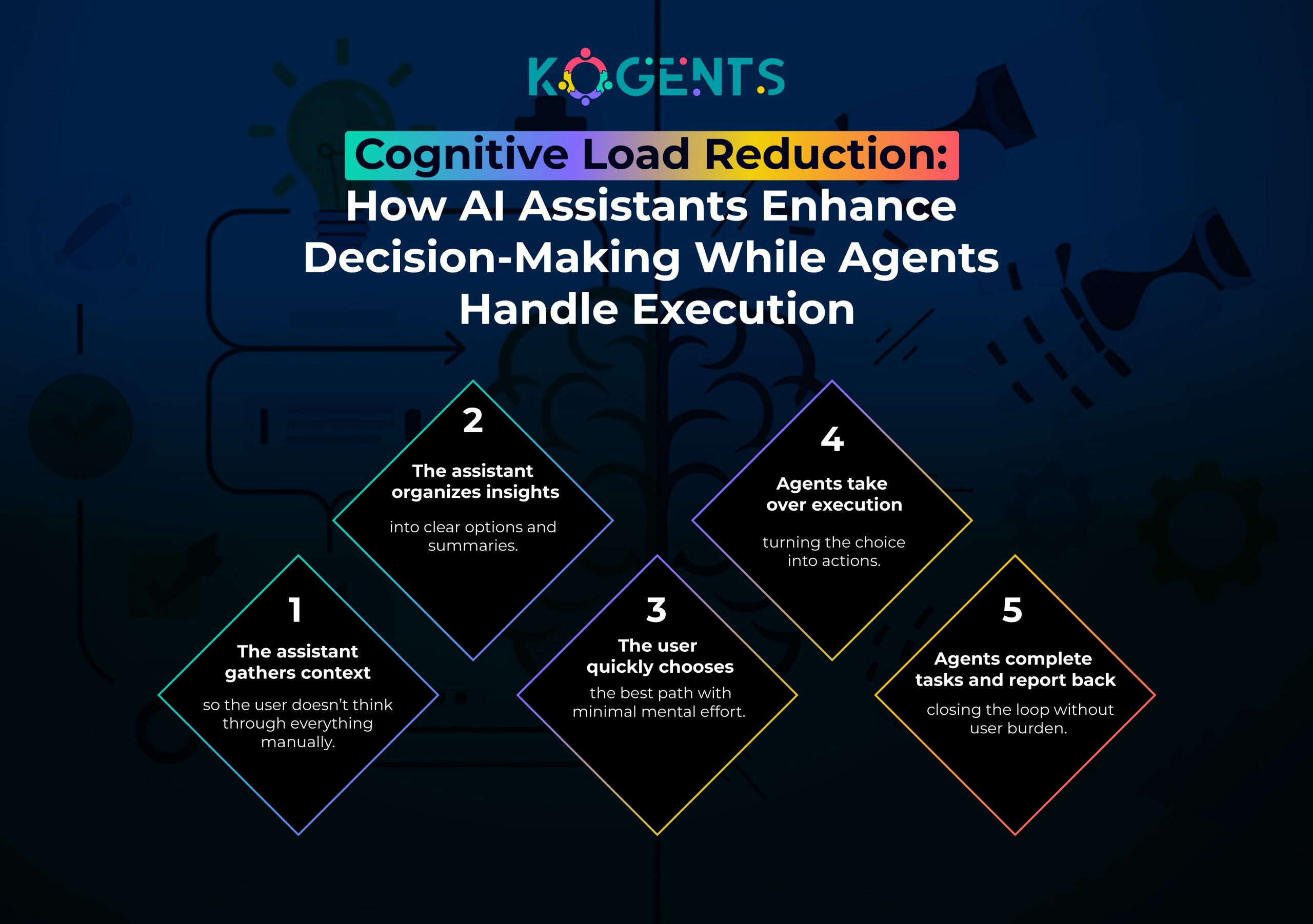

Hybrid Model — When Assistants and Agents Work Together

Enterprises increasingly rely on dual-layer systems:

Assistant Layer = natural language interface

Agent Layer = autonomous operational backbone

- Assistants clarify intent, gather context, and explain next steps.

- Agents execute the workflow, interact with systems, and complete the task.

Together, they deliver:

- stronger reliability

- higher interpretability

- faster task completion

- safer execution

The Hidden Cost of Choosing the Wrong System

Choosing incorrectly between agents and assistants creates unseen enterprise costs.

1. Over-Automation Risk

Using AI agents for subjective or human-judgment-driven workflows leads to:

- erroneous decisions

- unauthorized changes

- compliance breaches

2. Under-Automation Risk

Using assistants instead of agents causes:

- human bottlenecks

- limited scalability

- poor automation ROI

3. Integration Debt

Agents require multi-system orchestration; misaligned architecture causes:

- multi-month delays

- expensive rebuilds

- stalled pilots

4. Compliance Exposure

Agents without governance increase risk across regulated industries.

This section breaks new ground by addressing the organizational cost of incorrect AI selection.

The Cognitive vs Executive Divide — A Breakthrough Concept

Most organizations mistakenly treat assistants and agents as interchangeable.

But the divide is structural:

Cognitive Layer (Assistants)

Acts as the enterprise brain:

- interprets intent

- analyzes information

- generates insights

Executive Layer (Agents)

Acts as the enterprise body:

- executes actions

- interacts with systems

- updates data

- monitors workflows

Aligning layers ensures:

- clarity

- accuracy

- reliability

- operational safety

This conceptual model is rarely covered but critical for AI maturity.

Agent Failure Modes (The Real World Issues No One Talks About)

Understanding failure modes enables system-hardening.

1. Action Mismatch

The agent selects the incorrect tool/action.

2. State Drift

Loses track of workflow progression.

3. Reasoning Loops

Gets stuck attempting to perfect reasoning.

4. Schema Misinterpretation

Misreads the API or database schema.

5. Premature Termination

Ends workflow due to misunderstood success conditions.

6. Permission Overreach

Attempts restricted operations.

Identifying these upfront dramatically increases trust and stability.
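Some of these failure modes can be caught mechanically. As one example, a reasoning loop often shows up as the same tool call repeated with the same arguments. The sketch below flags that pattern; `detect_reasoning_loop`, the trace format, and the `max_repeats` default are assumptions made for illustration.

```python
from collections import Counter
from typing import Iterable, Optional, Tuple

Action = Tuple[str, str]  # (tool name, serialized arguments)


def detect_reasoning_loop(actions: Iterable[Action], max_repeats: int = 3) -> Optional[Action]:
    """Return the first action seen more than max_repeats times, or None if no loop is found."""
    seen: Counter = Counter()
    for action in actions:
        seen[action] += 1
        if seen[action] > max_repeats:
            return action
    return None


healthy_trace = [("search", "q=invoice"), ("read", "id=7"), ("update", "id=7")]
looping_trace = [("search", "q=invoice")] * 4 + [("read", "id=7")]

print(detect_reasoning_loop(healthy_trace))
print(detect_reasoning_loop(looping_trace))
```

A supervisor could run a check like this after every step and halt or escalate the workflow once a repeated action is returned.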

AI Execution Risk Scoring — The Missing Framework for Safe Autonomous Agents

As enterprises adopt autonomous agents, the biggest gap isn’t in tooling or orchestration; it’s in the absence of a predictive framework that estimates the risk of each agent decision before execution happens.

This is where AI Execution Risk Scoring (AERS) becomes a crucial addition to enterprise AI maturity.

AERS evaluates every planned action using four quantifiable parameters:

1. Action Sensitivity Score

Measures the consequence of the planned action:

- Low (UI click, data fetch)

- Medium (record update, workflow trigger)

- High (delete, financial transfer, compliance-impacting execution)

Agents adjust caution levels dynamically based on sensitivity.

2. Confidence Threshold Score

Assesses how certain the agent is about:

- tool selection

- parameter mapping

- outcome predictability

Low-confidence actions trigger human-in-the-loop review.

3. System Dependency Score

Rates how many systems will be affected downstream:

- Single system → low dependency

- Multi-system cascade → high dependency

Prevents agents from creating “automation domino effects.”

4. Compliance Exposure Score

Evaluates legal and regulatory risk:

- GDPR data access

- HIPAA PHI exposure

- Financial reporting impact

- SOX or PCI implications

Agents use this score to determine if they need supervisory approval.
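To make the four parameters concrete, here is one possible way to combine them into a single score and a routing decision. The article does not specify a formula, so everything numeric below is an assumption: the 0-to-1 scales, the weights, the two thresholds, and the names `PlannedAction`, `risk_score`, and `route` are illustrative only.

```python
from dataclasses import dataclass

# Assumed weights and thresholds: illustrative values, not a published AERS specification.
WEIGHTS = {"sensitivity": 0.4, "uncertainty": 0.2, "dependency": 0.2, "compliance": 0.2}
REVIEW_THRESHOLD = 0.5
BLOCK_THRESHOLD = 0.8


@dataclass(frozen=True)
class PlannedAction:
    sensitivity: float  # 0.0 (data fetch) to 1.0 (delete, financial transfer)
    confidence: float   # 0.0 (guessing) to 1.0 (certain)
    dependency: float   # 0.0 (single system) to 1.0 (multi-system cascade)
    compliance: float   # 0.0 (no regulated data) to 1.0 (high regulatory exposure)


def risk_score(action: PlannedAction) -> float:
    """Weighted sum of the four parameters; confidence is inverted so higher always means riskier."""
    parts = {
        "sensitivity": action.sensitivity,
        "uncertainty": 1.0 - action.confidence,
        "dependency": action.dependency,
        "compliance": action.compliance,
    }
    return sum(WEIGHTS[name] * value for name, value in parts.items())


def route(action: PlannedAction) -> str:
    """Map the score to a handling decision."""
    score = risk_score(action)
    if score >= BLOCK_THRESHOLD:
        return "block"
    if score >= REVIEW_THRESHOLD:
        return "human_review"
    return "auto_execute"


fetch = PlannedAction(sensitivity=0.1, confidence=0.9, dependency=0.0, compliance=0.0)
transfer = PlannedAction(sensitivity=1.0, confidence=0.6, dependency=0.5, compliance=1.0)
purge = PlannedAction(sensitivity=1.0, confidence=0.2, dependency=1.0, compliance=1.0)

for name, action in [("fetch", fetch), ("transfer", transfer), ("purge", purge)]:
    print(name, route(action))
```

With these assumed weights, the low-sensitivity fetch runs automatically, the financial transfer is routed to human review, and the low-confidence, high-impact purge is blocked. Real deployments would need to calibrate the weights and thresholds against their own incident data.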

Use Case Studies

1. Siemens – Autonomous Factory Agents

Siemens used multi-agent decision systems for dynamic scheduling, predictive maintenance, and supply chain signaling.

Outcome: 20% reduction in downtime.

2. Mayo Clinic – Clinical Workflow Agents

Mayo Clinic used agentic task orchestration for triaging, routing, and EMR updates.

Outcome: 30% faster clinical workflow throughput.

3. UPS – Route Optimization Agents (ORION Project)

The ORION project applies multi-agent optimization to delivery routing and traffic modeling.

Outcome: Saved 10+ million gallons of fuel annually.

4. ING Bank – Risk Surveillance Agents

ING Bank introduced agents that monitor fraud, transaction patterns, and credit anomalies.

Outcome: 40% reduction in manual review volume.

5. Boeing – Predictive Maintenance Agents

At Boeing, a multi-agent workflow orchestrates part replacement, inspections, and diagnostics.

Outcome: 33% less unplanned maintenance.

The Era of Autonomous Execution Has Begun

Understanding AI Agents vs AI Assistants is no longer a technical preference; it’s an enterprise strategy.

Assistants elevate cognition; agents elevate execution.

Together, they will define the operational fabric of the next decade.

Organizations that deploy agents with governance, state-awareness, and deterministic execution will outperform competitors across automation, cost efficiency, and innovation.

Build reliable, production-ready agents with Kogents.ai.

If you want enterprise-grade AI agents with validated tool-use, safe orchestration, and multi-step execution pipelines, explore our website, designed for safe, governed, auditable, and scalable agentic automation.

FAQs

How do AI agents ensure actions are correct before execution?

Agents use validation pipelines that check parameters, simulate execution, ensure data integrity, and prevent high-risk operations. Many enterprises also add human approval layers for destructive actions (deletes, financial transfers).

Can AI assistants evolve into agents automatically?

No—assistants need additional architecture: execution engines, validators, environment understanding, and tool integration. Without these layers, an assistant remains conversational.

What makes agent reliability harder than assistant reliability?

Assistants generate text; agents manipulate systems. The consequences of agent errors are operationally significant—affecting databases, workflows, and customers.

How do multi-agent systems improve accuracy?

They break responsibilities into planners, executors, validators, and reviewers, mirroring human team roles. Research from Microsoft AutoGen shows a 15–25% improvement in overall task accuracy.

What is “environment grounding” in agent systems?

It’s the technique of giving agents real-time knowledge of system state, reducing hallucinated actions, and providing deterministic execution paths.

Are agents suitable for highly regulated industries?

Yes, if deployed with compliance controls, audit trails, encrypted action logs, and strict access governance, in line with HIPAA, SOX, and GDPR requirements and the NIST AI Risk Management Framework.

What training is required for teams to adopt agentic automation?

Teams must understand workflow mapping, action constraints, exception handling, and prompt structuring. Many companies start with low-risk pilot workflows first.

How do vector databases improve agent accuracy and planning?

They act as memory banks where agents retrieve procedural instructions, examples, business rules, and previous outcomes—enabling consistency and reducing planning drift.
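The retrieval step can be illustrated without a real vector database. In this sketch the "embeddings" are hand-written three-dimensional toy vectors and `MEMORY`, `cosine_similarity`, and `retrieve` are hypothetical names; a production system would use a learned embedding model and a dedicated vector store.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Toy memory bank (hypothetical): stored instruction -> hand-made embedding.
MEMORY: Dict[str, List[float]] = {
    "refund policy: approve under 50 USD": [0.9, 0.1, 0.0],
    "escalation rule: notify manager": [0.1, 0.9, 0.1],
    "invoice format: use template B": [0.0, 0.2, 0.9],
}


def retrieve(query_vec: List[float], top_k: int = 1) -> List[Tuple[float, str]]:
    """Return the top_k stored entries ranked by similarity to the query vector."""
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in MEMORY.items()]
    scored.sort(reverse=True)
    return scored[:top_k]


best_score, best_text = retrieve([0.8, 0.2, 0.0])[0]
print(best_text)
```

Retrieving the same stored rule for similar queries is what gives the agent a consistent starting point for planning, instead of regenerating the procedure from scratch each time.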

Are agents more expensive to run than assistants?

Agents consume more compute because they process multiple steps, run validations, and call APIs. However, the efficiency gains (automation lift) typically outweigh the cost.

How can enterprises prevent agent “overreach”?

By using strict RBAC permissions, action allowlists, execution throttles, human approval layers, and environment-level constraints that prevent unauthorized operations.